In a world flooded with information, how often have you found yourself lost in a sea of data, tirelessly sifting through documents for that one precise answer? Imagine poring over lengthy reports, trying to extract specific insights with exact keyword searches. Despite your efforts, the results are often like finding a needle in a haystack: time-consuming and sometimes futile.

Enter the era of Generative Question Answering with Large Language Models (LLMs), a paradigm shift in how we interact with and retrieve information from documents. Moving beyond the limitations of traditional keyword-based searches, Generative QA harnesses the power of LLMs to understand, interpret, and generate responses that are not just accurate but contextually rich. This article explores how this innovative approach is revolutionizing information retrieval, transforming the way we process, search, and derive meaning from vast repositories of unstructured data.

The dawn of Large Language Models like GPT-4 and BERT has ushered in a new era in the field of information retrieval. These models have transcended the limitations of traditional keyword-centric searches, introducing a more intuitive, human-like interaction with data. LLMs are not just about retrieving data based on input queries; they’re about understanding the context, the subtleties of language, and the underlying intent behind each query. This transformative approach allows users to engage in generative question answering (GQA), where complex queries are met with comprehensive, relevant, and accurate answers.

One of the most thought-provoking applications of this technology is in everyday information retrieval (IR) systems, such as Google Search, which indexes the web to retrieve information based on search terms. Imagine the capabilities of GQA in domains like eCommerce or entertainment, akin to how Netflix recommends TV shows or how Amazon suggests products. These are not mere retrieval tasks; they are complex IR functions that involve creating, storing, and accessing vast data stores. Integrating vector databases and embeddings into these systems enables efficient retrieval of relevant information and significantly enhances the user experience.

LLMs in GQA systems represent a leap in generative AI, where the simplest GQA system can construct insightful summaries based on retrieved contexts, making sense of vast amounts of data in ways previously unimagined. This is not just about fine-tuning responses to specific questions; it’s about sculpting a new field of interaction where machines understand and respond in a manner that resonates with human cognition.

The power of LLMs in question answering is more than a faint echo of what is possible; it is a clear signal of the world-changing potential of generative AI. As we fine-tune these models and their applications, we stand at the threshold of a new era in information retrieval, one that promises more accurate, efficient, and human-like interactions with the vast expanse of data available in the digital world.

Understanding Generative Question Answering

The Basics of Generative QA: How it Differs from Traditional Models and Searches

At its core, generative question answering represents a paradigm shift from traditional models. Unlike traditional search systems that rely on exact keyword matches, generative systems use vector embeddings and vector databases to interpret the context and nuances of user text queries. This approach allows for a more intuitive, human-like interaction, in which the system retrieves relevant information and generates responses that are not just accurate but insightful.

The Role of LLMs in Enhancing QA Capabilities

Large Language Models like OpenAI’s GPT have been game-changers in this field. They take generative QA to the next level, allowing for a deeper understanding of the query and its context. LLMs can sculpt human-like interactions, creating answers that resonate with the user’s intent and providing an intelligent and insightful summary based on the retrieved contexts. The most straightforward GQA system requires nothing more than a user’s text query and an LLM. More complex systems may additionally be fine-tuned on narrow domain knowledge or combined with search and recommendation engines, so that they not only answer the question but also link to all the relevant sources. With these models, even the simplest GQA system transcends traditional search terms and functions, moving towards a more dynamic, responsive, and interactive form of information retrieval.

The Technical Backbone of Generative QA Using Large Language Models

Integration of LLMs in Document Analysis

Generative question answering models can be integrated into information retrieval systems, elevating the process of document analysis. By leveraging LLMs, we can create more dynamic and intuitive systems that not only search for data but also understand and interpret it. This integration enables the processing of complex queries across various domains, transforming the way we interact with and retrieve information.

The Process of Generating Answers: From Data to Response

- Document Parsing and Preparation:

- The system starts by loading and parsing documents of different formats, such as text, PDFs, or database entries.

- Documents are then split into manageable chunks, which can be paragraphs, sentences, or even smaller units. NLP packages like NLTK simplify this task considerably: they provide ready-made tools for a quick start and handle intricate details like newlines and special characters, letting engineers focus on more advanced aspects instead of these fundamental but crucial elements.

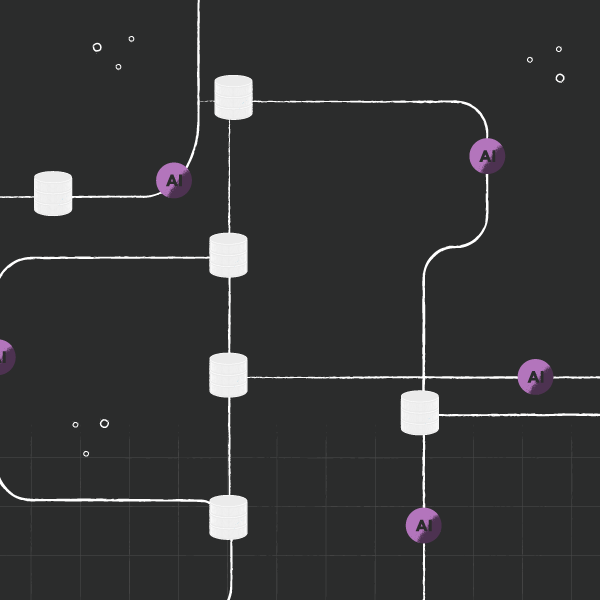
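To make the chunking step concrete, here is a minimal Python sketch. It assumes plain text with paragraphs separated by blank lines and uses a naive regular-expression sentence splitter as a crude stand-in for a proper tokenizer such as NLTK’s `sent_tokenize`; the function name `split_into_chunks` and the `max_chars` limit are illustrative choices, not part of any library.

```python
import re
from typing import List


def split_into_chunks(text: str, max_chars: int = 500) -> List[str]:
    """Split text into chunks of whole sentences, each at most ~max_chars long.

    Paragraphs (separated by blank lines) are never merged, so chunk
    boundaries respect the document's own structure. A single sentence
    longer than max_chars becomes its own (oversized) chunk.
    """
    chunks: List[str] = []
    for paragraph in re.split(r"\n\s*\n", text):
        # Collapse internal newlines and repeated whitespace into single spaces.
        paragraph = " ".join(paragraph.split())
        if not paragraph:
            continue
        # Naive sentence splitter: break after ., ! or ? followed by whitespace.
        sentences = re.split(r"(?<=[.!?])\s+", paragraph)
        current = ""
        for sentence in sentences:
            candidate = f"{current} {sentence}".strip()
            if current and len(candidate) > max_chars:
                # Adding this sentence would overflow the chunk: close it.
                chunks.append(current)
                current = sentence
            else:
                current = candidate
        if current:
            chunks.append(current)
    return chunks
```

For example, `split_into_chunks(report_text, max_chars=40)` keeps short consecutive sentences together and starts a new chunk at every paragraph break. A real tokenizer handles abbreviations such as "e.g." far better than this regex does.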

- Text Embedding and Indexing:

- Each chunk of text is converted into a numerical vector, an embedding that captures the semantic meaning of the text. Embedding models that can be used here include:

- Universal Sentence Encoder (or one of its many variants),

- DRAGON+ (license: CC BY-NC 4.0),

- Instructor, which can customize embeddings based on an instruction prompt,

- even a large language model.

- These embeddings are stored in a vector store, anything from a plain in-memory array to a dedicated vector database, forming a searchable index that enables efficient information retrieval. Tools that can be used for this task:

- NumPy - depending on the number of your documents, simply scanning all of them linearly and checking each one for relevance may, surprisingly, be a feasible option.

- Faiss - easy to get started with, offers multiple algorithms for building an index, and has been on the market since the early days of semantic search. The downside is its simplicity: you have to filter results on your own and, if you need sharding and replication, implement them yourself.

- Elasticsearch/OpenSearch - heavier to deploy, but they take care of not only vector retrieval but also filtering, sharding, and replication.

- Vector databases like Pinecone or Chroma.
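For small collections, the NumPy option above can be as simple as the sketch below: a hypothetical `BruteForceIndex` class that scans every stored vector and applies an exact-match metadata filter before scoring, the kind of filtering you would otherwise implement yourself on top of Faiss. It assumes the embeddings were already produced by one of the models listed earlier; the 3-dimensional vectors used to try it out are made up.

```python
from typing import Dict, List, Optional, Sequence, Tuple

import numpy as np


class BruteForceIndex:
    """Minimal in-memory vector index: a linear scan over all stored embeddings.

    Vectors are L2-normalized on insertion, so a dot product equals cosine
    similarity. Metadata filtering is done by hand before scoring.
    """

    def __init__(self, dim: int):
        self.vectors = np.empty((0, dim), dtype=np.float32)
        self.texts: List[str] = []
        self.metadata: List[Dict] = []

    def add(self, vectors, texts: Sequence[str], metadata: Optional[Sequence[Dict]] = None):
        vectors = np.asarray(vectors, dtype=np.float32)
        norms = np.linalg.norm(vectors, axis=1, keepdims=True)
        vectors = vectors / np.clip(norms, 1e-12, None)  # avoid division by zero
        self.vectors = np.vstack([self.vectors, vectors])
        self.texts.extend(texts)
        self.metadata.extend(metadata or [{} for _ in texts])

    def search(self, query, k: int = 3, where: Optional[Dict] = None) -> List[Tuple[float, str]]:
        """Return up to k (score, text) pairs, optionally filtered by exact metadata match."""
        query = np.asarray(query, dtype=np.float32)
        query = query / max(np.linalg.norm(query), 1e-12)
        # Rows whose metadata contains every key/value pair in `where`.
        candidates = [
            i for i, meta in enumerate(self.metadata)
            if not where or all(meta.get(key) == value for key, value in where.items())
        ]
        if not candidates:
            return []
        scores = self.vectors[candidates] @ query
        order = np.argsort(-scores)[:k]
        return [(float(scores[j]), self.texts[candidates[j]]) for j in order]
```

With a production report, a defect summary, and a shipping manifest indexed under a `dept` key, `index.search(query_vec, where={"dept": "logistics"})` scores only the manifest. The linear scan costs one matrix-vector product per query, which is why it stays practical only up to a moderate number of chunks.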

- Query Processing and Context Retrieval:

- When a user poses a query, it is embedded with a compatible model so that it aligns with the indexed data. Most often this is the same model used to encode the documents, but with an asymmetric dual encoder like DRAGON+, the query is encoded by the other model of the pair.

- The system then retrieves the most relevant text chunks based on similarity search metrics like cosine similarity, creating a context for the answer.
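The retrieval step can be sketched with NumPy as well. The hypothetical `build_context` below scores every chunk by cosine similarity, takes the top `k`, and concatenates them until a character budget runs out; that budget is an assumed, crude stand-in for the LLM’s token limit, and the query vector is expected to come from the matching (query) encoder.

```python
import numpy as np


def cosine_similarity(query: np.ndarray, matrix: np.ndarray) -> np.ndarray:
    """Cosine similarity between one query vector and every row of `matrix`."""
    dot = matrix @ query
    norms = np.linalg.norm(matrix, axis=1) * np.linalg.norm(query)
    return dot / np.maximum(norms, 1e-12)  # guard against zero-length vectors


def build_context(query_vec, chunk_vecs, chunks, k: int = 3, max_chars: int = 2000) -> str:
    """Pick the k most similar chunks and join them into one context string.

    Chunks are added in order of decreasing similarity until adding the
    next one would exceed `max_chars`.
    """
    scores = cosine_similarity(
        np.asarray(query_vec, dtype=float), np.asarray(chunk_vecs, dtype=float)
    )
    top = np.argsort(-scores)[:k]
    selected, used = [], 0
    for i in top:
        text = chunks[i]
        if used + len(text) > max_chars:
            break
        selected.append(text)
        used += len(text)
    return "\n\n".join(selected)
```

Stopping at the budget rather than truncating a chunk mid-sentence keeps every piece of context readable for the model.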

- Answer Generation:

- The LLM, acting as a generative model, uses the retrieved contexts alongside the question to generate a response. It produces the answer token by token, modeling the conditional probability of each next token given the question, the retrieved contexts, and the text generated so far, to formulate an answer that is not only contextually accurate but also insightful.
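The generation step mostly comes down to prompt construction. The sketch below shows one possible prompt layout, not a prescribed one: it numbers the retrieved chunks so the model can cite them, and `llm` is a placeholder for whatever function sends a prompt to your model (a hosted API or a self-hosted LLM) and returns its text. The hypothetical helper `cited_sources` maps `[n]` citations back to the chunks, one simple way to link an answer to its sources.

```python
import re
from typing import Callable, List, Sequence


def build_prompt(question: str, contexts: Sequence[str]) -> str:
    """Assemble a retrieval-augmented prompt with numbered sources."""
    sources = "\n".join(f"[{i}] {text}" for i, text in enumerate(contexts, start=1))
    return (
        "Answer the question using only the sources below. "
        "Cite the sources you use by number, e.g. [1]. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer(question: str, contexts: Sequence[str], llm: Callable[[str], str]) -> str:
    """Generate an answer; `llm` is any function that maps a prompt to generated text."""
    return llm(build_prompt(question, contexts))


def cited_sources(answer_text: str, contexts: Sequence[str]) -> List[str]:
    """Map [n] citations in the generated answer back to the retrieved chunks."""
    cited = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer_text)})
    # Ignore citation numbers that do not correspond to any retrieved chunk.
    return [contexts[n - 1] for n in cited if 1 <= n <= len(contexts)]
```

Passing the model in as a plain callable keeps the pipeline independent of any particular provider, so the same code can be tested with a stub and later pointed at a hosted or self-hosted model.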

This step-by-step process highlights how generative QA systems, augmented with LLMs, not only retrieve relevant information but also generate responses that provide a deeper understanding of the query. These systems represent the next generation of information retrieval, where the interaction is akin to a conversation with a knowledgeable entity, offering precise and insightful answers to specific questions.

Practical Applications and Use Cases for Generative Question Answering

Enhancing Customer Support with Automated Responses

Generative QA is transforming customer support by enabling automated, yet highly intuitive and context-aware responses. Leveraging LLMs, customer support systems can now provide answers that are not only accurate but also tailored to the specific nuances of each query. This results in a more efficient customer service experience, with faster response times and a reduction in the reliance on human agents for routine inquiries.

Streamlining Search within Reports and Unstructured Documents

GQA is revolutionizing the search process within organizations, particularly in handling complex internal documents like manufacturing reports, logistics documents, and sales notes. By integrating vector databases into the core generative AI system, these systems efficiently index and retrieve relevant information, ensuring that key points in vast datasets are not missed while giving employees a human-like way to interact with the data. This approach enables employees to quickly access the exact information they need, from specific queries on production data to insights in customer care documents. Examples of such use cases include:

- In manufacturing, GQA can quickly sift through production reports to identify key performance indicators or pinpoint issues in the manufacturing process.

- For logistics, these systems can scan through shipping and receiving documents to optimize supply chain operations or locate specific shipment information in real-time.

- In sales and customer care, GQA aids in extracting valuable insights from customer interactions and sales reports, helping in strategy formulation and enhancing customer relationship management.

Knowledge Management for Large Organizations

Large organizations, with their extensive repositories of knowledge and information, are finding generative QA systems particularly beneficial. These systems provide a platform for building a comprehensive index of internal knowledge sources, enabling easy access to and retrieval of information. This not only simplifies finding relevant data but also, as long as the index is kept up to date, ensures that insights are drawn from the complete and latest information available, making GQA a vital tool in the decision-making process. Whether it’s a query about company policies, historical data, or specific project reports, GQA systems offer an efficient way to manage and retrieve knowledge, aiding in streamlined operations.

Challenges and Considerations for Question Answering Systems

While Generative AI systems offer immense benefits, they also come with their own set of challenges and considerations that need to be addressed for effective implementation.

Addressing Accuracy and Reliability Issues

Accuracy and reliability remain primary challenges for generative question answering tools. Ensuring that answers are not only contextually relevant but also factually accurate is paramount. This involves fine-tuning models with high-quality data and continuously updating them to reflect new information. Moreover, better vector embeddings can improve the precision of the retrieved contexts, enhancing the overall reliability of the system.

Mitigating Model Hallucinations

Model hallucinations, where a system generates plausible but incorrect or nonsensical answers, are a significant concern. This requires careful design of the retrieval-augmented GQA system, ensuring that the model correctly interprets input data and queries. Continuous monitoring and updating of the model, along with using reliable information sources for training, are essential steps to mitigate this issue.
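One simple safeguard in a retrieval-augmented setup is to let the system abstain when retrieval finds nothing relevant, instead of letting the model improvise. The sketch below assumes the retriever returns `(similarity, text)` pairs; the `min_score` threshold of 0.35 is an arbitrary placeholder that would need tuning for a given embedding model, and `llm` is again a hypothetical prompt-to-text function.

```python
from typing import Callable, Sequence, Tuple

FALLBACK = "I could not find this in the indexed documents."


def grounded_answer(
    question: str,
    scored_chunks: Sequence[Tuple[float, str]],
    llm: Callable[[str], str],
    min_score: float = 0.35,
) -> str:
    """Call the LLM only when retrieval found sufficiently similar context.

    scored_chunks: (similarity, text) pairs produced by the retriever.
    min_score: placeholder cut-off; tune it per embedding model on held-out questions.
    """
    relevant = [text for score, text in scored_chunks if score >= min_score]
    if not relevant:
        # Abstain rather than let the model answer from its own (possibly wrong) memory.
        return FALLBACK
    context = "\n\n".join(relevant)
    prompt = (
        "Answer strictly from the context below. If it is insufficient, "
        f"reply exactly: {FALLBACK}\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
    return llm(prompt)
```

Low-scoring chunks are also dropped from the prompt, so loosely related text cannot nudge the model toward a plausible but unsupported answer. This reduces hallucinations but does not eliminate them, which is why monitoring remains necessary.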

High Computing Costs

The computational demands of running large language models are substantial. Vector databases and sophisticated IR systems used in GQA require significant processing power, which can lead to high operational costs. Accessing LLMs via APIs, while convenient, can be costly and hard to budget for. The costs can escalate quickly, especially for businesses that require extensive data processing. This makes it essential for organizations to plan their usage of these models, balancing the benefits against the financial impact. Optimizing model efficiency, considering lighter model architectures, and employing efficient data storage and retrieval methods are ways to manage these costs.
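Because API providers typically bill per token, a back-of-the-envelope estimate helps with budgeting. The prices and traffic figures below are hypothetical placeholders, not any provider’s actual rates; note that in a GQA system the retrieved context usually makes up most of the prompt.

```python
def monthly_api_cost(
    queries_per_day: int,
    prompt_tokens: int,
    completion_tokens: int,
    usd_per_1k_prompt: float,
    usd_per_1k_completion: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend for a per-token-priced LLM API.

    prompt_tokens covers the question plus all retrieved context chunks;
    completion_tokens is the length of the generated answer.
    """
    cost_per_query = (
        prompt_tokens / 1000 * usd_per_1k_prompt
        + completion_tokens / 1000 * usd_per_1k_completion
    )
    return queries_per_day * days * cost_per_query


# Hypothetical figures, for illustration only.
baseline = monthly_api_cost(2000, 3000, 300, 0.01, 0.03)
smaller_context = monthly_api_cost(2000, 1500, 300, 0.01, 0.03)
print(f"{baseline:.2f} USD vs {smaller_context:.2f} USD per month")
```

With these assumed numbers (2,000 queries a day, 3,000 prompt tokens and 300 completion tokens per query, 0.01 and 0.03 USD per 1,000 tokens), the estimate is about 2,340 USD per month. Halving the retrieved context to 1,500 prompt tokens brings it down to about 1,440 USD, which is why retrieving fewer, better chunks is one of the more effective cost levers.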

Overcoming Data Privacy and Security Concerns with Self-Hosted LLM

In an age where data is a critical asset and sometimes a competitive advantage, concerns around privacy and security are paramount, especially when using hosted LLM services like ChatGPT. Many people use ChatGPT constantly without considering the privacy or security of their data. For organizations, sharing sensitive or proprietary data through external APIs is often not an option. Self-hosted LLMs offer a solution to this dilemma, enabling organizations to leverage the power of generative AI while keeping their data within a controlled environment. This approach helps meet privacy requirements and preserves both the confidentiality of organizational data and the competitive advantage it provides. It’s a strategic way to benefit from advanced AI capabilities without compromising on data security and privacy.

Embracing the Next Wave of Question Answering Technology

As we stand at the cusp of a new era in information retrieval, the advent of Generative Question Answering systems, powered by Large Language Models, heralds a significant transformation. These systems, integrating vector databases and sophisticated embeddings, are not just answering specific questions; they’re reshaping the very structure of how we access and interact with information. The simplest GQA system, requiring only a user text query and an LLM, can now generate intelligent and insightful summaries, sculpting a new form of human-like interaction with data.

This shift towards advanced GQA systems presents an opportunity for organizations to revolutionize their approach to data retrieval. nexocode stands ready at the forefront of this transformation. With our expertise in building custom AI-based solutions and implementing self-hosted LLMs, we can help your organization harness the full potential of generative AI. Whether it’s fine-tuning a system for specific domains or creating an application that redefines how you interact with your data, our team is equipped to guide you through this new field.

Are you ready to begin this journey? To explore how our expertise in GQA systems can provide accurate answers and create value from your vast data resources, contact the machine learning experts at nexocode. Let us construct a solution that not only retrieves information but also generates insights, changing the way you interact with your world of data.

Contact nexocode’s AI Experts – Let’s shape the future of Generative AI together.